2021-2022

The Aesthetic of the Crowd

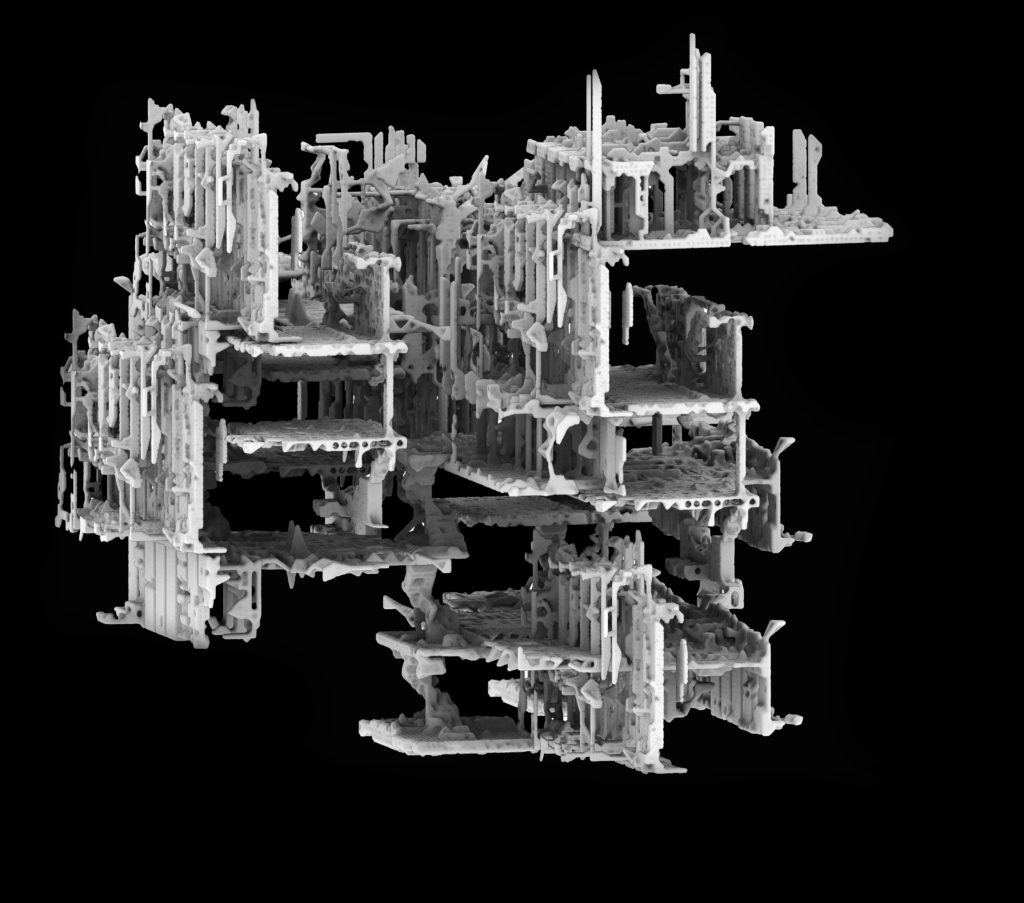

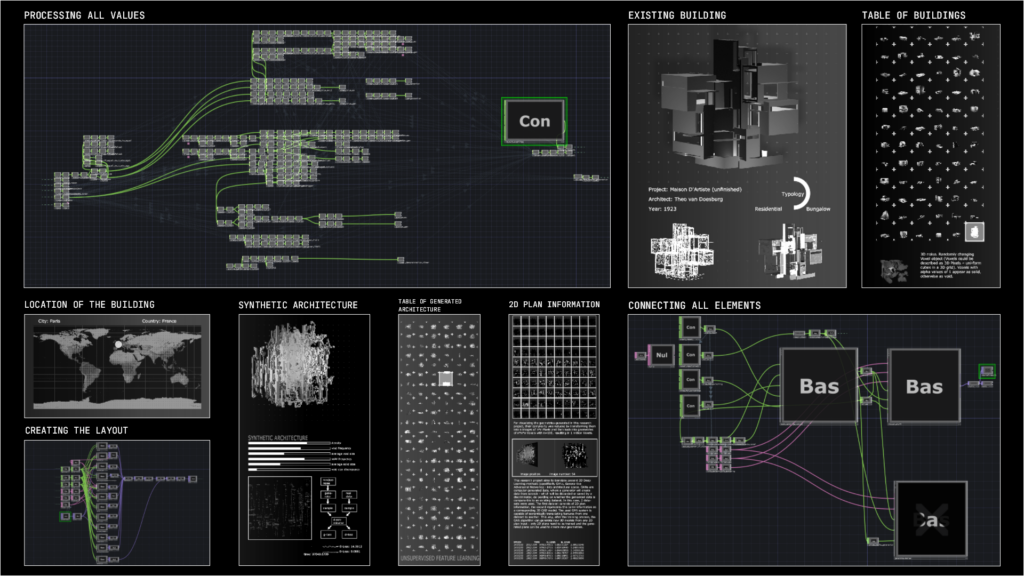

The Aesthetic of the Crowd describes the visual collaboration between the human crowd and the machine, through the investigation, translation, and transformation of the machine’s vision. The multimodal and immersive work describes a reality in which the limits of individual design creation are overcome by exploring the deeper meaning of the reciprocal relationship between the collective and the machine. It is not an individual who curates the dataset, but the crowd. AI is used to rethink participatory design.

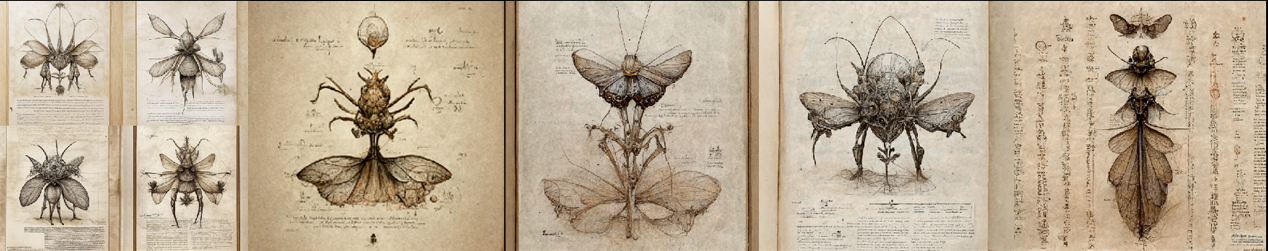

The human vision of the many meets the machine vision, which is equivalent but fundamentally different. The Aesthetic of the Crowd reflects on how immersive technologies and machine learning can be used to expand the human senses and foster new authoring formats, empathy, and cohabitation. The artwork brings the human and the non-human, the visible and the invisible, into collision, thereby creating new spatial constellations of cohabitation of human, machine, and other species, formed through the meta domain of a new formal language. The artwork points to a possible future in which the clear boundaries between the human and the other might blur to make way for a new many-species society.

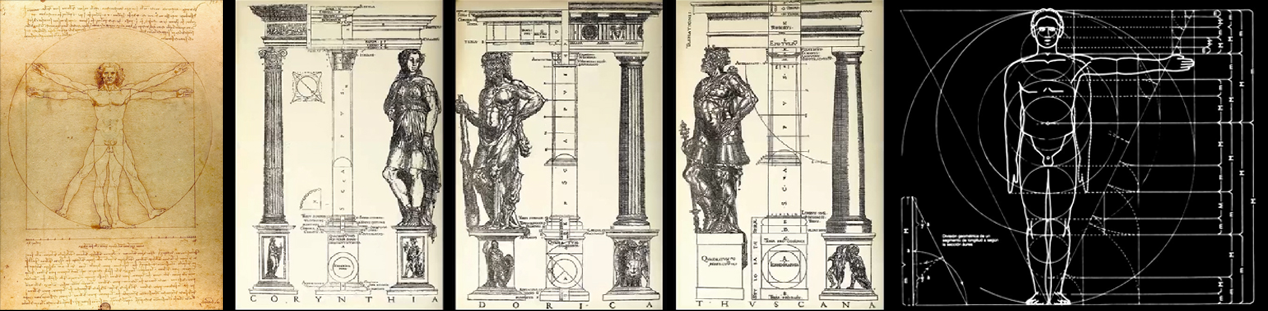

Since Vitruvius, architecture has been closely aligned with human measurement, and more precisely with one type of body, male and white, which is proposed to represent all people. These standards are deeply ingrained in modern architecture, in its proportions and ideas of space, and in the laws that control them.

Inspired by Jorge Luis Borges’ Imaginary Beings (1957), the project intends to expand the anthropomorphic analogies inscribed in architecture. In projecting the future, this work makes use of AI algorithms to imagine beings of the not-yet-known and to look beyond the human scale.

The series of hybrids of human and non-human beings establishes a continuously changing proportional system. The hybrids thereby redraw the limits of the discipline, hybridizing it with others with which, until that very moment, it had no connection.

Credits

Ingrid Mayrhofer-Hufnagl, Benjamin Ennemoser, Lorenz Foth

Part of the research project on 3D GANs supported by the Early-Stage Funding by the vice-rector of research, University of Innsbruck